In this blog post, I will demonstrate how duplicate detection works in Azure Service Bus queues.


1. Create a Service Bus namespace in the Azure portal. Make sure you select the Standard pricing tier or above, then click Create. The created namespace is shown below.


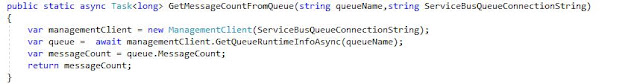

5. Add a method to count the messages in the queue.
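A minimal sketch of such a method, assuming the `ManagementClient` class from the `Microsoft.Azure.ServiceBus.Management` namespace, is given here. It is not necessarily the code in the author's repository: the class name `QueueInspector` and the method name `GetMessageCountAsync` are hypothetical, and the connection string is assumed to carry sufficient (typically Manage) rights to read queue runtime information.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.ServiceBus.Management;

// Hypothetical helper class; the name is an assumption made for illustration.
public static class QueueInspector
{
    // Returns the number of active messages currently sitting in the queue.
    public static async Task<long> GetMessageCountAsync(string connectionString, string queueName)
    {
        var managementClient = new ManagementClient(connectionString);
        try
        {
            // Runtime info carries the message counters for the queue.
            QueueRuntimeInfo runtimeInfo = await managementClient.GetQueueRuntimeInfoAsync(queueName);
            return runtimeInfo.MessageCountDetails.ActiveMessageCount;
        }
        finally
        {
            // Release the underlying connection whether or not the call succeeded.
            await managementClient.CloseAsync();
        }
    }
}
```

Reading `ActiveMessageCount` rather than the total `MessageCount` leaves dead-lettered and scheduled messages out of the result, which seems to be the number of interest for this experiment.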

Pre-Requisites

____________

- Azure Subscription

- Azure Service Bus namespace created in the Standard pricing tier or above. Duplicate detection is not available in the Basic pricing tier.

- Azure Service Bus Queue with Duplicate Detection enabled

2. Create a queue under the Service Bus namespace created in step 1.

Select the "Enable duplicate detection" checkbox and specify the duplicate detection time window. (Any incoming message whose MessageId matches one already received during this window is dropped as a duplicate.)

I have specified a duplicate detection window of 5 minutes. We will send multiple messages with the same MessageId property and verify how many of them actually end up in the Service Bus queue.

Click Create.
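The same queue settings can also be applied from code instead of the portal. The following is a sketch under assumptions, not part of the original walkthrough: it uses `ManagementClient` and `QueueDescription` from `Microsoft.Azure.ServiceBus.Management`, and the class and method names (`QueueSetup`, `CreateQueueWithDuplicateDetectionAsync`) are hypothetical.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.ServiceBus.Management;

// Hypothetical helper class; the name is an assumption made for illustration.
public static class QueueSetup
{
    // Creates the queue with duplicate detection enabled and a 5-minute window,
    // unless a queue with that name already exists.
    public static async Task CreateQueueWithDuplicateDetectionAsync(string connectionString, string queueName)
    {
        var managementClient = new ManagementClient(connectionString);
        try
        {
            var description = new QueueDescription(queueName)
            {
                // Equivalent of the "Enable duplicate detection" checkbox.
                RequiresDuplicateDetection = true,
                // Equivalent of the duplicate detection time window field.
                DuplicateDetectionHistoryTimeWindow = TimeSpan.FromMinutes(5)
            };

            if (!await managementClient.QueueExistsAsync(queueName))
            {
                await managementClient.CreateQueueAsync(description);
            }
        }
        finally
        {
            await managementClient.CloseAsync();
        }
    }
}
```

Duplicate detection has to be chosen when the queue is created, so the sketch leaves an existing queue untouched rather than trying to update it.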

3. Now we will create a console application that pushes 10 messages at 30-second intervals, i.e. all 10 messages will be sent to the queue within 5 minutes. All messages will be assigned the same MessageId property.

4. Add the Microsoft.Azure.ServiceBus NuGet package to your console app, then add the following using directives.

using Microsoft.Azure.ServiceBus;

using Microsoft.Azure.ServiceBus.Management;

6. Add the following in the Main method.
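A self-contained sketch of what such a Main method might look like is given here. It is an illustration under assumptions rather than the author's exact code: the connection string and queue name are placeholders, the MessageId value `duplicate-test-1` and the message bodies are invented for the example, and an `async Main` needs C# 7.1 or later.

```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using Microsoft.Azure.ServiceBus;
using Microsoft.Azure.ServiceBus.Management;

class Program
{
    // Placeholders (assumptions): replace with your own values.
    const string ConnectionString = "<your-service-bus-connection-string>";
    const string QueueName = "<your-queue-name>";

    static async Task Main()
    {
        var queueClient = new QueueClient(ConnectionString, QueueName);
        const string messageId = "duplicate-test-1"; // same id for every message

        for (int i = 1; i <= 10; i++)
        {
            var message = new Message(Encoding.UTF8.GetBytes($"Message {i}"))
            {
                MessageId = messageId
            };

            await queueClient.SendAsync(message);
            Console.WriteLine($"Sent message {i} with MessageId {messageId}");

            // Wait 30 seconds between sends; 9 gaps keep all sends inside 5 minutes.
            if (i < 10)
            {
                await Task.Delay(TimeSpan.FromSeconds(30));
            }
        }
        await queueClient.CloseAsync();

        // Read the queue counters to see how many messages were actually kept.
        var managementClient = new ManagementClient(ConnectionString);
        QueueRuntimeInfo info = await managementClient.GetQueueRuntimeInfoAsync(QueueName);
        Console.WriteLine($"Messages in queue: {info.MessageCount}");
        await managementClient.CloseAsync();
    }
}
```

Every `SendAsync` call is expected to complete without an error, because the broker accepts a duplicate and then discards it. The queue count, not the send result, is what reveals the duplicates.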

7. Press F5 to run the application and see how many messages actually end up in the queue.

8. As we can see, even though we sent ten messages to the queue, the message count is 1. Because all the messages had the same MessageId, the other 9 were dropped as duplicates by the Service Bus queue (the send calls themselves still succeed).
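The arithmetic behind this result can be checked without touching Azure. The following is a hypothetical in-memory model of a duplicate detection window, not the Service Bus implementation: it assumes the broker remembers each MessageId from the moment it first keeps a message and drops any repeat that arrives before the window has elapsed. The names `DuplicateDetector`, `TryAccept` and `WindowDemo` are invented for this sketch.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model of a duplicate detection window (an assumption,
// not how Service Bus is implemented internally).
public class DuplicateDetector
{
    private readonly TimeSpan _window;
    private readonly Dictionary<string, DateTime> _seen = new Dictionary<string, DateTime>();

    public DuplicateDetector(TimeSpan window)
    {
        _window = window;
    }

    // Returns true if the message is kept, false if it is dropped as a duplicate.
    public bool TryAccept(string messageId, DateTime sentAt)
    {
        DateTime firstSeen;
        if (_seen.TryGetValue(messageId, out firstSeen) && sentAt - firstSeen < _window)
        {
            return false; // same id seen inside the window: drop
        }
        _seen[messageId] = sentAt; // remember when this id was kept
        return true;
    }
}

public static class WindowDemo
{
    // Sends `messages` messages with one shared id, `interval` apart,
    // and returns how many the model keeps.
    public static int CountAccepted(int messages, TimeSpan interval, TimeSpan window)
    {
        var detector = new DuplicateDetector(window);
        var start = new DateTime(2024, 1, 1, 0, 0, 0, DateTimeKind.Utc);
        int accepted = 0;
        for (int i = 0; i < messages; i++)
        {
            DateTime sentAt = start + TimeSpan.FromTicks(interval.Ticks * i);
            if (detector.TryAccept("same-id", sentAt))
            {
                accepted++;
            }
        }
        return accepted;
    }
}
```

Calling `WindowDemo.CountAccepted(10, TimeSpan.FromSeconds(30), TimeSpan.FromMinutes(5))` returns 1 in this model: the last message is sent 270 seconds after the first, which is still inside the 300-second window.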

We can also verify in Service Bus Explorer that only one message was received in the queue.

Conclusion

A Service Bus queue with duplicate detection enabled drops any message whose MessageId matches that of a message already received within the duplicate detection time window. Only messages with a unique MessageId are retained in the queue. The code can be found at https://github.com/yogeetayadav/SbDuplicateDetection